STANFORD, Calif. — In a world where our every move seems to be tracked, analyzed, and categorized, it might feel like there's little about ourselves that remains truly private. But what if even our most basic physical features, like the shape of our face or the size of our nose, could reveal intimate details about who we are and what we believe? A groundbreaking new study suggests that artificial intelligence (AI) may be able to do just that - predict our political orientation from nothing more than an expressionless photograph.

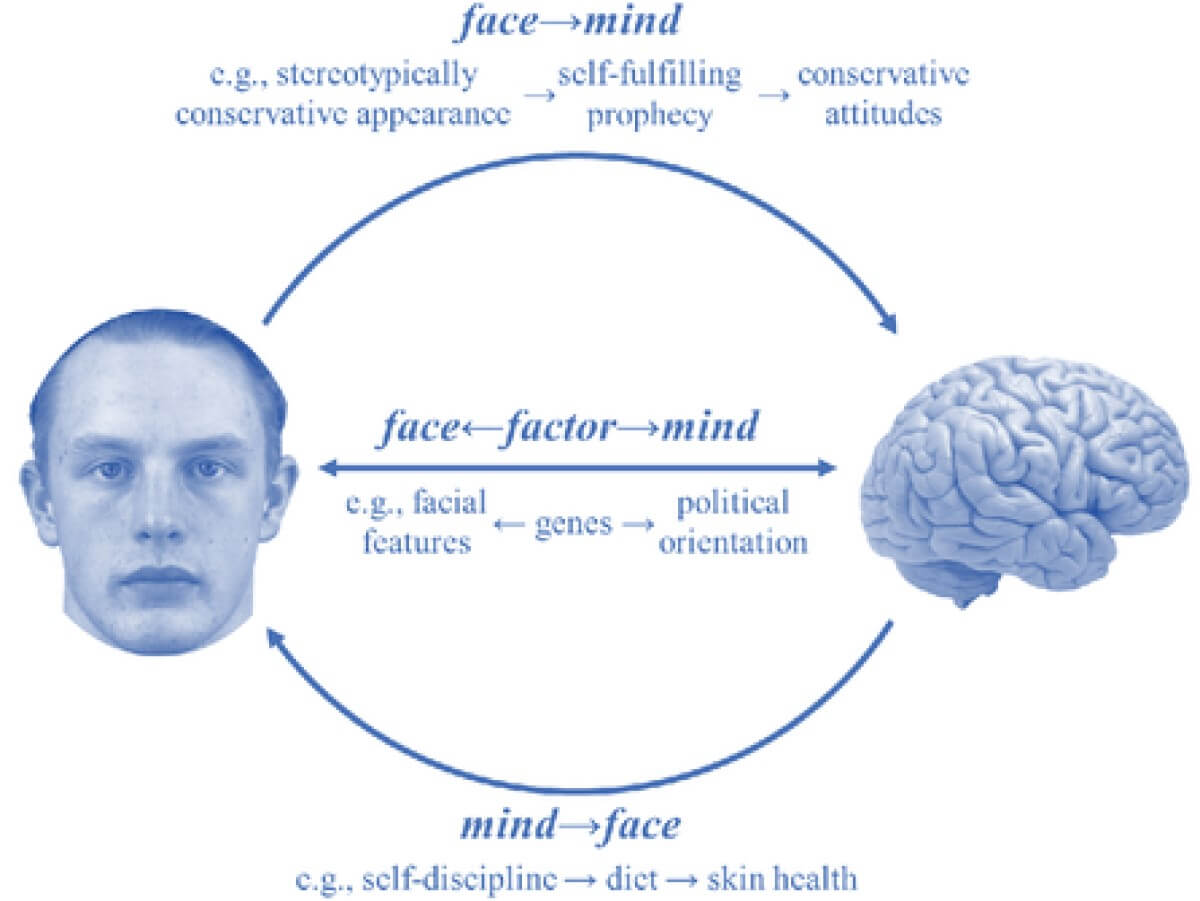

The research, led by Michal Kosinski at Stanford University and published in American Psychologist, set out to investigate whether there's a link between our facial structure and our political beliefs. To do this, the researchers recruited a diverse group of participants and took carefully standardized photos of their faces, controlling for factors like lighting, head orientation, and facial expression. Participants also filled out detailed questionnaires about their political views, which placed them on a spectrum from liberal to conservative.

Next, the researchers fed these expressionless facial images into a sophisticated AI algorithm known as a deep neural network. This type of AI is modeled loosely on the human brain, with layers of interconnected “neurons” that can learn to recognize patterns and make predictions. In this case, the AI was tasked with finding any correlations between the subtle physical variations in people's faces and their self-reported political orientation.

Remarkably, the AI was able to predict political orientation at a rate well above chance, even after accounting for factors like age, gender, and ethnicity. In fact, the algorithm's predictions were about as reliable as those made by human raters who were shown the same facial images. This suggests that there are indeed subtle cues in our facial structure that can give away our political leanings, even when we're not expressing any emotion.
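For readers wondering what "accounting for" a factor like age can look like in practice, here is a minimal Python sketch of one common approach, a partial correlation. It is an illustration only, not the researchers' actual method: the data are randomly generated rather than taken from the study, only a single factor (age) is controlled for, and the function names `pearson`, `partial_corr`, and `make_synthetic` are invented for this example.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length lists of numbers."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def partial_corr(xs, ys, zs):
    """Correlation between xs and ys after removing the linear effect of zs."""
    r_xy = pearson(xs, ys)
    r_xz = pearson(xs, zs)
    r_yz = pearson(ys, zs)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def make_synthetic(n=2000, seed=0):
    """Invented data (an assumption, not study data): age nudges both the
    face-based score and the self-reported political views."""
    rng = random.Random(seed)
    ages, views, scores = [], [], []
    for _ in range(n):
        age = rng.gauss(0, 1)
        view = 0.3 * age + rng.gauss(0, 1)
        score = 0.5 * age + 0.2 * view + rng.gauss(0, 1)
        ages.append(age)
        views.append(view)
        scores.append(score)
    return ages, views, scores


if __name__ == "__main__":
    ages, views, scores = make_synthetic()
    print("raw correlation:", round(pearson(scores, views), 3))
    print("controlling for age:", round(partial_corr(scores, views, ages), 3))
```

In this made-up data, the link between the score and political views shrinks once age is taken into account, but it does not disappear. Controlling for several factors at once, such as age, gender, and ethnicity together, would call for a multiple regression rather than this one-variable formula.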

So, what exactly is the AI picking up on? The researchers found that the key seems to lie in the size and shape of certain facial features, particularly in the lower part of the face. Conservatives, for instance, tended to have slightly wider jaws and larger chins compared to liberals. These differences are subtle and easy to miss at a glance, but they were enough for the AI to detect a pattern.

It's important to note that this doesn't mean your political views are written all over your face for the world to see. The differences the AI is detecting are extremely subtle, and it still gets it wrong a significant portion of the time. Think of it more like an educated guess than a foolproof prediction.

However, even an educated guess about something as personal as political belief can feel unsettling in an age of increasing surveillance and data collection. If AI can infer our politics from a simple photograph, what else might it be able to learn about us? Our personality traits? Our sexual orientation? Our risk of developing certain diseases?

These are the sorts of questions that are becoming increasingly urgent as facial recognition technology becomes more sophisticated and more widespread. Already, these systems are being used for everything from unlocking our smartphones to tracking our movements in public spaces. In China, facial recognition has reportedly even been used to publicly shame jaywalkers and rate citizens' trustworthiness.

As the Stanford study shows, the capabilities of this technology may go far beyond simple identification. By analyzing the subtle patterns in our facial features, AI could potentially make all sorts of inferences about our inner lives and identities. This raises profound questions about privacy, consent, and the misuse of our biometric data.

So what can be done? The researchers stress the need for tighter regulations around the collection and use of facial images, particularly by companies and governments. They also call for more public awareness and debate around these issues so that we can collectively decide what uses of this technology are acceptable and what crosses the line.

At the end of the day, our faces may reveal more about us than we realize, or would like. As AI grows ever more insightful, the challenge will be to ensure that this knowledge is used to empower and protect us, not to exploit or manipulate. Because while we may not be able to control every aspect of our appearance, we should still have a say in how it's interpreted and used by others. Our faces, after all, belong to us.