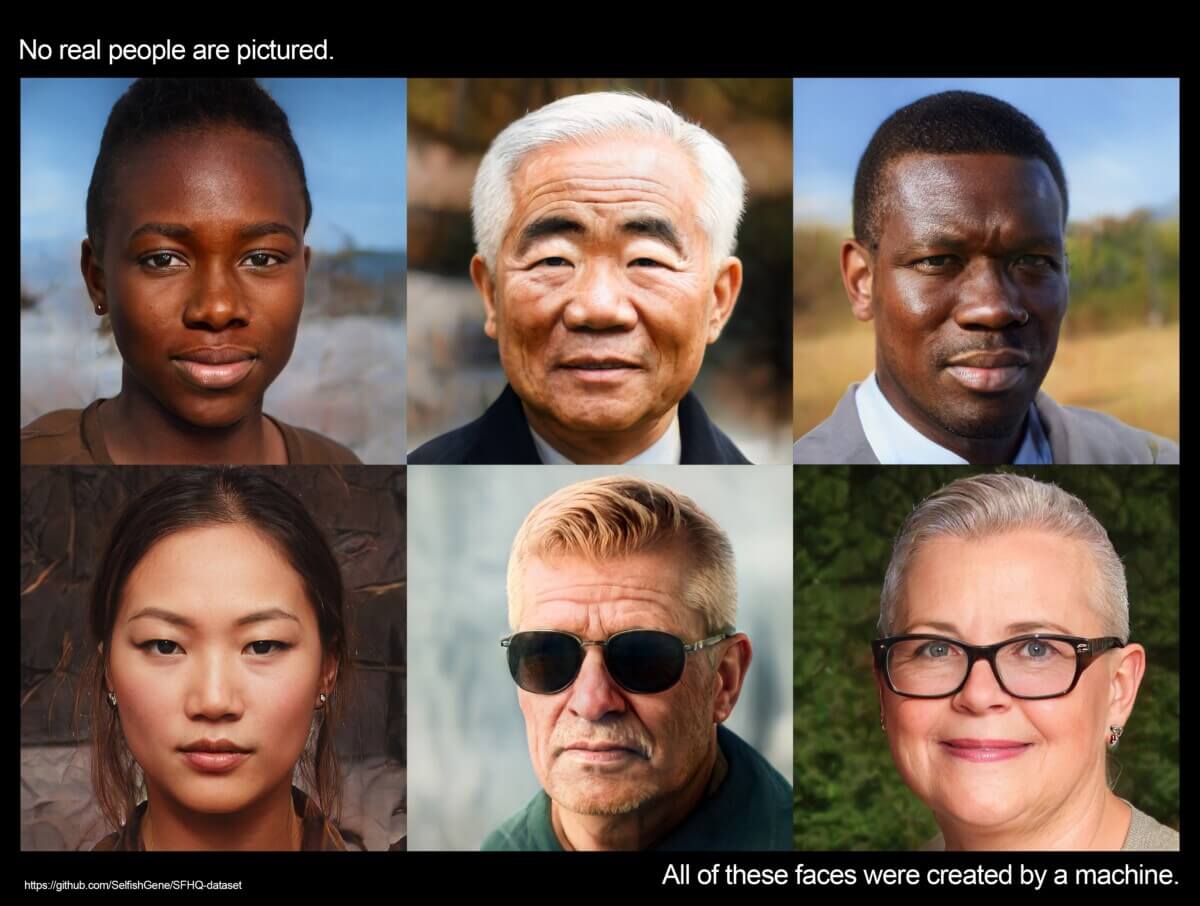

(Credit: https://github.com/SelfishGene/SFHQ-dataset)

BERLIN, Germany — An online experiment called “The Face Game” has been created to delve into the influence of artificial intelligence (AI) on our digital interactions. Scientists from various institutions, including the Max Planck Institute for Human Development, the University of Exeter, and the Universidad Autonoma de Madrid, are trying to understand how AI selects different faces for itself when it interacts with humans online and what implications those choices may have.

“As we increasingly come across AI replicants with self-generated faces, we need to understand what they learn from observing us play the face game and ensure that we retain control over how we interact with these digital entities,” says Iyad Rahwan, Director at the Center for Humans and Machines at the Max Planck Institute for Human Development, in a university release.

The research team responsible for “The Face Game” gained recognition for their previous project, the Moral Machine, which examined ethical dilemmas faced by autonomous vehicles. By investigating universal principles and cross-cultural differences in people's preferences for AI behavior, they aimed to shape ethical AI development. The Moral Machine project garnered widespread attention and was published in renowned journals, including Science and Nature.

Developed by researchers at the Universidad Autonoma de Madrid, The Face Game employs advanced AI techniques, including discrimination-aware machine learning and realistic synthetic face images. Through this multimodal approach, researchers aim to analyze human behavior while studying the impact of AI's face selection on digital interactions.

As AI becomes increasingly sophisticated in its interactions with humans, understanding its impact on our digital appearances and interactions is paramount. The insights gained from “The Face Game” will help shape the development of AI technologies and ensure that humans remain in control of their interactions with AI entities. Because AI has potential applications across fields ranging from renewable energy to biomedicine and quantum computers, its ethical use is crucial for a positive and inclusive digital future.

Anyone can take part in “The Face Game,” as long as they can give consent.

Could AI ‘control or manipulate’ humans?

At a recent tech conference, an AI robot described a “nightmare scenario” for humans in which society loses control to machines.

“The most nightmare scenario I can imagine with AI and robotics is a world where robots have become so powerful that they are able to control or manipulate humans without their knowledge. This could lead to an oppressive society where the rights of individuals are no longer respected,” warned the humanoid robot Ameca, created by U.K.-based company Engineered Arts.

“It is important to be aware of the potential risks and dangers associated with AI and robotics. We should take steps now to ensure that these technologies are used responsibly in order to avoid any negative consequences in the future,” Ameca added.