Man and his virtual personality (© top images - stock.adobe.com)

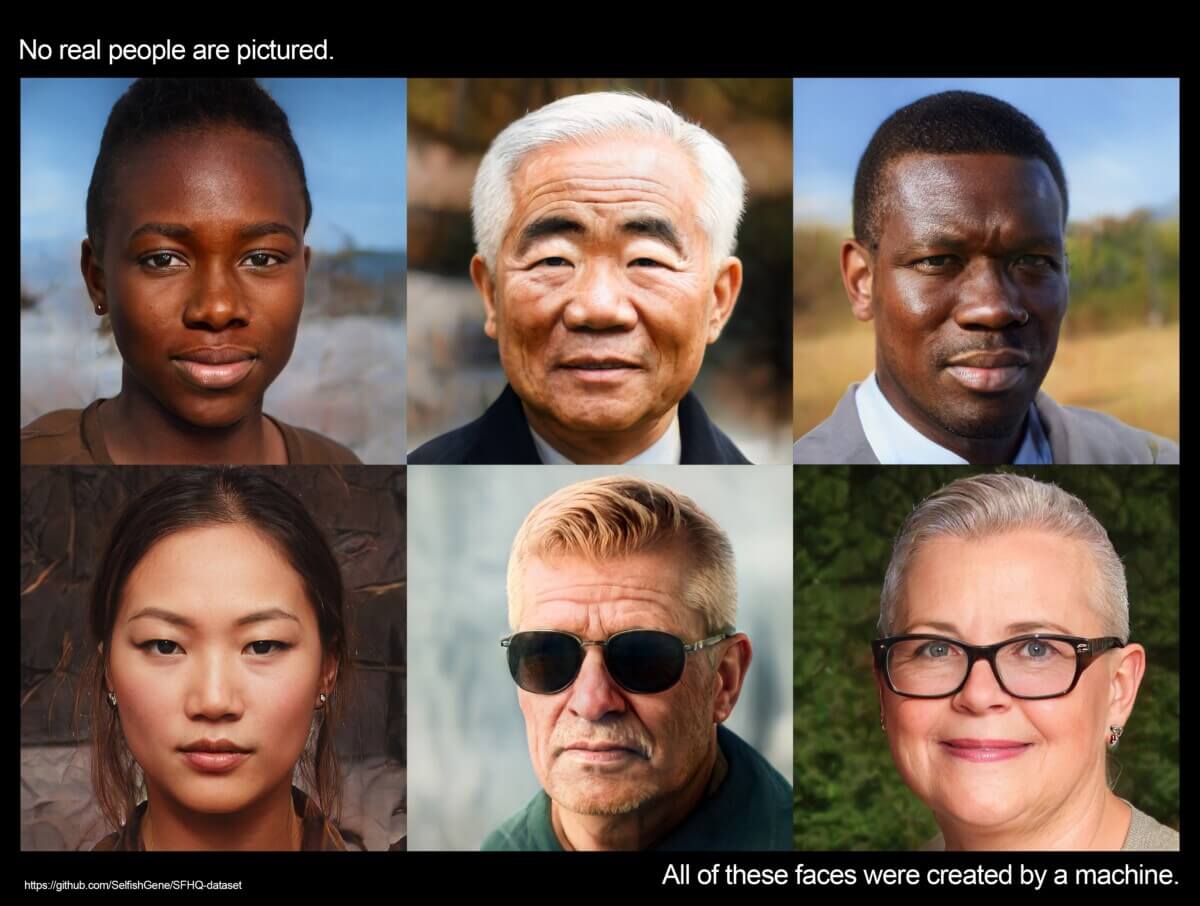

CANBERRA, Australia — In what sounds like a science-fiction thriller, Australian scientists have found that White faces generated by artificial intelligence are perceived as more realistic than the faces of actual humans. The discovery is raising concerns that the technology could reinforce racial biases online, because AI-generated faces of people of color did not show the same effect.

“If White AI faces are consistently perceived as more realistic, this technology could have serious implications for people of color by ultimately reinforcing racial biases online,” says study senior author Dr. Amy Dawel, of the Australian National University (ANU), in a media release. “This problem is already apparent in current AI technologies that are being used to create professional-looking headshots. When used for people of color, the AI is altering their skin and eye color to those of White people.”

AI's tendency to distort the appearance of people of color underscores the potential consequences of this phenomenon. The researchers also uncovered a second concern: people can be deceived by "hyper-realistic" AI-generated faces without realizing it.

“Concerningly, people who thought that the AI faces were real most often were paradoxically the most confident their judgments were correct,” explains study co-author Elizabeth Miller, PhD candidate at ANU. “This means people who are mistaking AI imposters for real people don’t know they are being tricked.”

Researchers delved into why AI-generated faces manage to deceive people.

“It turns out that there are still physical differences between AI and human faces, but people tend to misinterpret them. For example, White AI faces tend to be more in-proportion and people mistake this as a sign of humanness,” says Dr. Dawel. “However, we can’t rely on these physical cues for long. AI technology is advancing so quickly that the differences between AI and human faces will probably disappear soon.”

The researchers warn that this trend could fuel the spread of misinformation and identity theft, and they are calling for action. Dr. Dawel stressed the need for greater transparency in AI technology.

“AI technology can’t become sectioned off so only tech companies know what’s going on behind the scenes. There needs to be greater transparency around AI so researchers and civil society can identify issues before they become a major problem,” says Dr. Dawel.

The researchers also see raising public awareness as a crucial step in mitigating the risks of this technology.

“Given that humans can no longer detect AI faces, society needs tools that can accurately identify AI imposters,” notes Dr. Dawel. “Educating people about the perceived realism of AI faces could help make the public appropriately skeptical about the images they’re seeing online.”

The study is published in the journal Psychological Science.